Optimising Kubernetes deployment with local continuous development tooling

Something I’ve noticed through my work with Kubernetes is that it can be challenging to test things properly locally before deploying.

There are plenty of tools for testing things in continuous integration pipelines and as part of continuous deployment, but there aren’t many tools for increasing your confidence that your Kubernetes-based application (code and associated Helm charts, for example) will deploy without issues the first time.

I’ve seen people deploying to a dev cluster to test changes without first doing any checks locally. It’s a bit like Russian roulette, and the feedback loop is slow, especially if you first need to push a change to a repository and wait for CI workflows to complete before your change is eventually deployed.

The local development workflow is set up well for running local unit tests, for example, and maybe running integration tests in a Docker Compose setup. There are also tools such as Kubeconform for linting and validating your manifests. But, when it comes to making sure your application will deploy onto Kubernetes without incident, things are less well defined.

This is where tools such as Skaffold, Tilt, Draft or Garden come in.

I won’t go into the pros and cons of each tool here. There are other blog posts for that, but I will discuss why I decided on Skaffold and go into the workflow and setup for Skaffold locally.

Skaffold is a tool from Google designed to enable “Easy and Repeatable Kubernetes Development”. It’s open-source on GitHub and has extensive documentation on its main website.

I decided to use Skaffold for my local Kubernetes development for several reasons: it’s open-source, lightweight and easy to learn; it has the backing of Google, who are essentially the creators of Kubernetes; it allows for running different types of tests against containers; and it enables continuous development by watching changes to your source code in real time and automatically building and deploying to your cluster.

You can install it using one of its IDE plugins or as a standalone binary. I decided to install using the Homebrew package as I am running macOS:

brew install skaffold

Once installed, you can browse to your application repository (such as this Kubernetes Example Application) and initialise the Skaffold config using a simple:

skaffold init

As long as you have the basics set up, such as your source code, Helm charts, and Dockerfile, it should create a simple skaffold.yaml configuration file, much like the following (for builder I selected Docker and for buildpacks I selected go.mod):

apiVersion: skaffold/v4beta13
kind: Config
metadata:
  name: kubernetes-example-application
build:
  artifacts:
    - image: ghcr.io/gawbul
      docker:
        dockerfile: Dockerfile
deploy:
  helm:
    releases:
      - name: kubernetes-example-application
        chartPath: helm-chart
        valuesFiles:
          - helm-chart/values.yaml
        version: 0.0.1

This would allow you to build an image for the relevant application based on the Dockerfile and deploy it using Helm to your Kubernetes cluster.

You could very simply run the following to build the image and deploy to your cluster:

skaffold run

However, there are a few things that aren’t considered here.

- The image references GitHub Container Registry. We need to push the image to the registry and also set up imagePullSecrets to ensure the cluster can pull the image down (otherwise we’ll end up with ImagePullBackOff).
- The image value isn’t set properly (it only includes the registry and the owner, and not the actual image name).
- The values file is pointing at the Helm chart defaults, which may not include all the values we need.

This post won’t go into setting up your cluster to access GitHub Container Registry, although see the README here for a guide on how to set up KinD locally with all the relevant configuration.

A more appropriate skaffold.yaml to use once you have everything set up would be the following:

apiVersion: skaffold/v4beta13
kind: Config
metadata:
  name: kubernetes-example-application
build:
  platforms: ["linux/arm64"]
  artifacts:
    - image: ghcr.io/gawbul/kubernetes-example-application
      docker:
        dockerfile: Dockerfile
        buildArgs:
          TARGETOS: linux
  local:
    push: true
deploy:
  helm:
    releases:
      - name: kubernetes-example-application
        chartPath: helm-chart/
        valuesFiles:
          - environments/common.values.yaml
          - environments/dev.values.yaml
        setValueTemplates:
          global.image.registry: "{{.IMAGE_REPO_ghcr_io_gawbul_kubernetes_example_application}}"
          global.image.tag: "{{.IMAGE_TAG_ghcr_io_gawbul_kubernetes_example_application}}@{{.IMAGE_DIGEST_ghcr_io_gawbul_kubernetes_example_application}}"
test:
  - image: ghcr.io/gawbul/kubernetes-example-application
    structureTests:
      - './structure-tests/*'
verify:
  - name: kubernetes-example-application-health-check
    container:
      name: kubernetes-example-application-health-check
      image: alpine/curl:8.14.1
      command: ['curl']
      args: ['-s', 'http://kubernetes-example-application.default.svc.cluster.local:8080']
    executionMode:
      kubernetesCluster: {}

This is based on the structure of the Kubernetes Example Application repository linked above.

It does a few things differently:

- It sets the target platform as linux/arm64 as I’m running on a MacBook Pro with Apple silicon.
- It adds a TARGETOS build-arg based on the setup in the Dockerfile for multi-platform builds.
- It sets the local build argument push to true so that we build and push the image to the registry, rather than just storing it in the local build cache.
- It adds separate values files, including a common values file with values relevant to all environments, and an environment-specific dev values file with values specific to the dev environment.
- It uses setValueTemplates to override and inject the registry and tag into the values files, so that we can build dynamic image tags and have Skaffold be able to automatically reference them.
- It adds a test section with a reference to Container Structure Tests used for validating the built image.
- It adds a verify section, which creates an ad-hoc container used to verify that the application has been deployed correctly.
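The template variable names used under setValueTemplates follow a visible pattern: a prefix such as IMAGE_REPO, followed by the artifact's image name with its punctuation turned into underscores. As a rough sketch of how you might derive those names for your own image, the helper below (skaffold_image_var is a name I made up for illustration) assumes that '.', '/' and '-' each become '_', which is simply the pattern seen in the config above rather than a full statement of Skaffold's rules.

```shell
#!/bin/sh
# Build the name of a Skaffold image template variable for an artifact.
# Assumption: '.', '/' and '-' in the image name each become '_',
# which is the pattern visible in the skaffold.yaml in this post.
skaffold_image_var() {
  prefix="$1"   # IMAGE_REPO, IMAGE_TAG or IMAGE_DIGEST
  image="$2"    # image name as written under build.artifacts
  suffix=$(printf '%s' "$image" | sed 's|[./-]|_|g')
  printf '%s_%s\n' "$prefix" "$suffix"
}

# Print the three variable names for the example application.
for prefix in IMAGE_REPO IMAGE_TAG IMAGE_DIGEST; do
  skaffold_image_var "$prefix" ghcr.io/gawbul/kubernetes-example-application
done
```

Wrapping each printed name in {{. and }} gives the value to place in the Helm release's setValueTemplates.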

Now, when we do a skaffold run, it builds the image locally (if it doesn’t already exist) and pushes it to GitHub Container Registry. It then runs the container structure tests to ensure the image was built correctly, before finally deploying the application to the Kubernetes cluster using Helm.

If you followed the guide above using a clone of the repository, you should then be able to do the following:

curl -k https://kubernetes.localhost:8443/kubernetes-example-application

Which returns the following:

Hello from Kubernetes!%

You can then verify the deployment using:

skaffold verify

Which should return the following:

Loading images into kind cluster nodes...

Images loaded in 41ns

[kubernetes-example-application-health-check] Hello from Kubernetes!

The steps above have allowed you to confirm that your image has been built correctly and is structured in the manner you expect, as well as verifying that it has been deployed and is running correctly in your Kubernetes cluster.
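One thing worth knowing about the health check is that curl -s on its own exits successfully even when the server answers with an HTTP error status, so a stricter check may be useful. The following is a minimal sketch, not part of the example repository: check_response is a hypothetical helper that adds -f so that HTTP errors fail the command, and then compares the body with the text we expect.

```shell
#!/bin/sh
# Fetch a URL and compare the response body with an expected string.
# -f makes curl exit non-zero on HTTP error statuses (4xx/5xx),
# -s hides the progress meter, -S still prints an error message on failure.
check_response() {
  url="$1"
  expected="$2"
  body=$(curl -fsS "$url") || return 1
  [ "$body" = "$expected" ]
}
```

Run from somewhere that can resolve the service name, it could be called as check_response http://kubernetes-example-application.default.svc.cluster.local:8080 'Hello from Kubernetes!' and would return a non-zero status if the request fails or the body differs.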

The test step can test various components of your image (see the README for more details of the different tests), but in this case it just uses basic file existence and metadata tests:

schemaVersion: 2.0.0
fileExistenceTests:
  - name: 'app directory should not exist'
    path: '/app'
    shouldExist: false
  - name: 'main binary should exist'
    path: '/main'
    shouldExist: true
metadataTest:
  cmd: ['/main']
  entrypoint: []
  labels:
    - key: 'org.opencontainers.image.source'
      value: 'https://github.com/gawbul/kubernetes-example-application'
    - key: 'org.opencontainers.image.description'
      value: 'Kubernetes Example Application'
    - key: 'org.opencontainers.image.title'
      value: 'Kubernetes Example Application'
  exposedPorts: ['8080']
  user: ''
  volumes: []
  workdir: '/'

This checks that an /app directory doesn’t exist (this should only exist in the build step), checks that a /main binary exists, and checks various elements of the image metadata, including the labels and exposed ports. All very useful checks for ensuring your image is structured as it should be.

To remove the resources from your local cluster once you’re happy, you just need to do the following:

skaffold delete

To build and test a standalone image, you can do the following:

skaffold build --filename=skaffold.yaml --file-output=build.json

skaffold test --build-artifacts=build.json

The build.json is structured as follows:

{
  "builds": [
    {
      "imageName": "ghcr.io/gawbul/kubernetes-example-application",
      "tag": "ghcr.io/gawbul/kubernetes-example-application:0a91c77@sha256:5df4b0c92b7435cfcd0d9be9d9ed5a095284882c07345fd895884c9fb61e2cdf"
    }
  ]
}
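The tag value packs three things into one string: the repository, the tag and the digest. If you ever need them separately in a script, plain shell parameter expansion is enough. This is only a sketch with a hypothetical helper name (split_image_ref), and it assumes the reference always carries both a tag and a digest, as it does in the build.json above.

```shell
#!/bin/sh
# Split an image reference of the form repo:tag@digest into its parts.
# Assumption: both a tag and a digest are present, as in build.json above.
split_image_ref() {
  ref="$1"
  digest="${ref##*@}"      # everything after the '@'
  name_tag="${ref%%@*}"    # everything before the '@'
  tag="${name_tag##*:}"    # everything after the last ':'
  repo="${name_tag%:*}"    # everything before the last ':'
  printf 'repo=%s\ntag=%s\ndigest=%s\n' "$repo" "$tag" "$digest"
}

split_image_ref 'ghcr.io/gawbul/kubernetes-example-application:0a91c77@sha256:5df4b0c92b7435cfcd0d9be9d9ed5a095284882c07345fd895884c9fb61e2cdf'
```

If you have jq installed, jq -r '.builds[0].tag' build.json is one way to pull the tag string out of the file before passing it to the helper.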

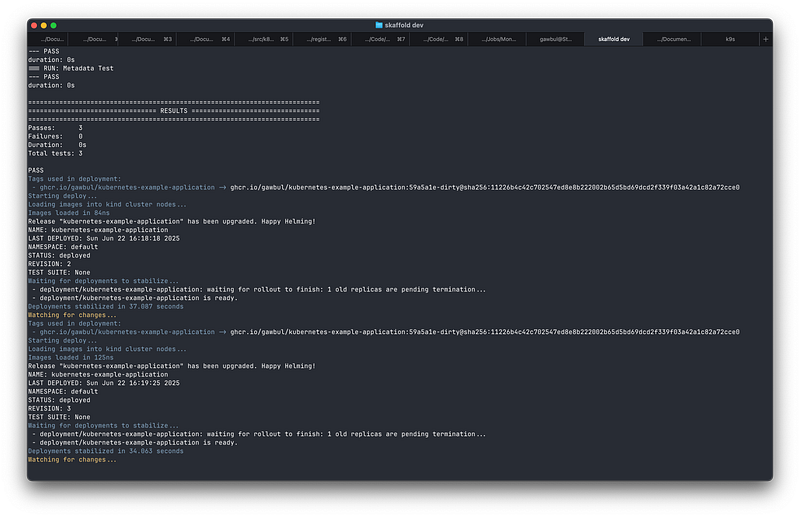

One of Skaffold’s most powerful features, however, is the ability to test changes to your code and Helm charts in real time.

You can run the following command:

skaffold dev

This does the same as skaffold run but adds a control loop at the end, which watches your application for changes. You can then update the source code for your application or the associated Helm charts, and it automatically rebuilds the image, and/or redeploys the Helm chart as appropriate. Once you’re finished, you just hit Ctrl+C and it automatically cleans up for you.

This is valuable when you’re trying to iterate on components of your application to debug issues with your code or deployment, for example.

Skaffold isn’t just limited to single repository deployments either. If you have a selection of microservices that integrate together, you can use Skaffold to test your changes in one application alongside the others.

For example, you might have the following skaffold-all.yaml file:

apiVersion: skaffold/v4beta13
kind: Config
metadata:
  name: kubernetes-micro-services
requires:
  - configs: ["kubernetes-micro-service-one"]
    git:
      repo: git@github.com:gawbul/kubernetes-micro-service-one.git
      path: skaffold.yaml
      ref: main
  - configs: ["kubernetes-micro-service-two"]
    git:
      repo: git@github.com:gawbul/kubernetes-micro-service-two.git
      path: skaffold.yaml
      ref: main
  - configs: ["kubernetes-micro-service-three"]
    git:
      repo: git@github.com:gawbul/kubernetes-micro-service-three.git
      path: skaffold.yaml
      ref: main
build:
  local:
    push: true
  artifacts:
    - image: ghcr.io/gawbul/kubernetes-micro-service-four
      docker:
        dockerfile: Dockerfile
deploy:
  helm:
    releases:
      - name: kubernetes-micro-service-four
        chartPath: helm-chart/
        valuesFiles:
          - environments/common.values.yaml
          - environments/dev.values.yaml
        setValueTemplates:
          global.image.registry: "{{.IMAGE_REPO_ghcr_io_gawbul_kubernetes_micro_service_four}}"
          global.image.tag: "{{.IMAGE_TAG_ghcr_io_gawbul_kubernetes_micro_service_four}}@{{.IMAGE_DIGEST_ghcr_io_gawbul_kubernetes_micro_service_four}}"
test:
  - image: ghcr.io/gawbul/kubernetes-micro-service-four
    structureTests:
      - './structure-tests/*'
verify:
  - name: kubernetes-micro-service-one-health-check
    container:
      name: kubernetes-micro-service-one-health-check
      image: alpine/curl:8.14.1
      command: ['curl']
      args: ['-s', 'http://kubernetes-micro-service-one.default.svc.cluster.local:8000/health']
    executionMode:
      kubernetesCluster: {}
  - name: kubernetes-micro-service-two-health-check
    container:
      name: kubernetes-micro-service-two-health-check
      image: alpine/curl:8.14.1
      command: ['curl']
      args: ['-s', 'http://kubernetes-micro-service-two.default.svc.cluster.local:8001/health']
    executionMode:
      kubernetesCluster: {}
  - name: kubernetes-micro-service-three-health-check
    container:
      name: kubernetes-micro-service-three-health-check
      image: alpine/curl:8.14.1
      command: ['curl']
      args: ['-s', 'http://kubernetes-micro-service-three.default.svc.cluster.local:8002/health']
    executionMode:
      kubernetesCluster: {}
  - name: kubernetes-micro-service-four-health-check
    container:
      name: kubernetes-micro-service-four-health-check
      image: alpine/curl:8.14.1
      command: ['curl']
      args: ['-s', 'http://kubernetes-micro-service-four.default.svc.cluster.local:8003/health']
    executionMode:
      kubernetesCluster: {}

This pulls in the Skaffold config from three separate micro-service repositories and allows you to build your current application alongside them, so that you can run integration and end-to-end tests if you wish.
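The health-check URLs in the verify section all follow the standard in-cluster Service DNS pattern of service, then namespace, then svc.cluster.local. A small sketch of generating them is shown below; service_url is a hypothetical helper, and it assumes the cluster uses the default cluster.local domain and that every service lives in the default namespace, as in the config above.

```shell
#!/bin/sh
# Build the in-cluster URL for a Service:
#   http://<service>.<namespace>.svc.cluster.local:<port><path>
# Assumption: the cluster uses the default cluster.local DNS domain.
service_url() {
  name="$1"
  namespace="$2"
  port="$3"
  path="$4"
  printf 'http://%s.%s.svc.cluster.local:%s%s\n' "$name" "$namespace" "$port" "$path"
}

# Print the health-check URL for each of the four services above.
for entry in one:8000 two:8001 three:8002 four:8003; do
  service_url "kubernetes-micro-service-${entry%%:*}" default "${entry##*:}" /health
done
```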

Additionally, Skaffold can use any local kubeconfig to access clusters that may not be local. So, if you have access to a remote GKE or EKS cluster for example, then you can use it to deploy there too. This is especially useful if your remote cluster is configured in a way that might be more difficult to replicate locally, such as if there are specific network policies or Kyverno policies in place.
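When remote clusters are in the mix, it is easy to deploy to the wrong one by accident. As a hedged sketch of a guard you could put in front of Skaffold, the hypothetical helper below (require_context) compares the context kubectl is currently pointing at with the one you intended to use.

```shell
#!/bin/sh
# Succeed only if kubectl's current context is the one we expect.
require_context() {
  expected="$1"
  current=$(kubectl config current-context) || return 1
  if [ "$current" != "$expected" ]; then
    echo "current context is '$current', expected '$expected'" >&2
    return 1
  fi
}
```

With a context called my-remote-dev (a made-up name), this could be used as require_context my-remote-dev && skaffold run --kube-context my-remote-dev, so the deployment only starts when the guard passes.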

To visually validate that your application(s) have been deployed, you can use kubectl to inspect the pods, for example. However, one of the things that Skaffold lacks, and that tools such as Tilt and Garden have, is a UI. This wasn’t something that bothered me, and the licensing implications meant that Skaffold was even more attractive.

If you want a UI to visualise your resources then there are plenty available. Two that I rate highly are k9s and Headlamp.

So, if you want to improve the speed of development when it comes to Kubernetes, I thoroughly recommend you check out Skaffold and spend some time learning about its capabilities. I’ve only scratched the surface here. Check out the website for much more detail on what it can do!